Basic Configuration

Azure Portal Configurations:

Make sure you apply the following settings when creating the Azure Blob Storage account:

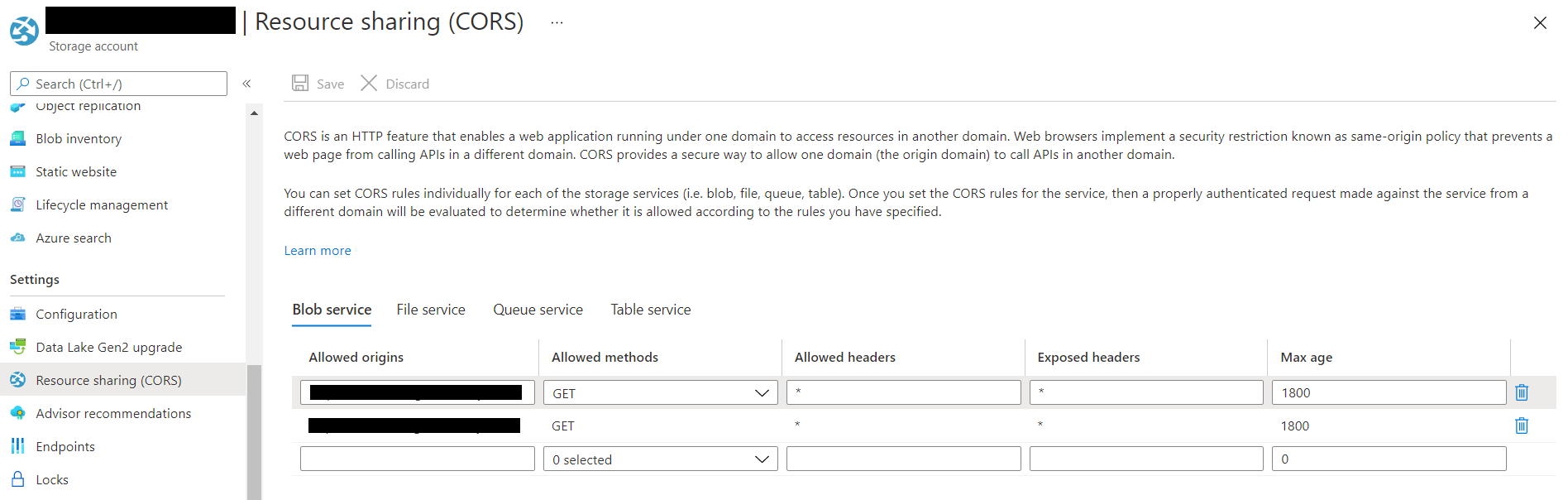

- Add your website to Allowed origins under Storage Account > Settings > Resource sharing (CORS), as follows:

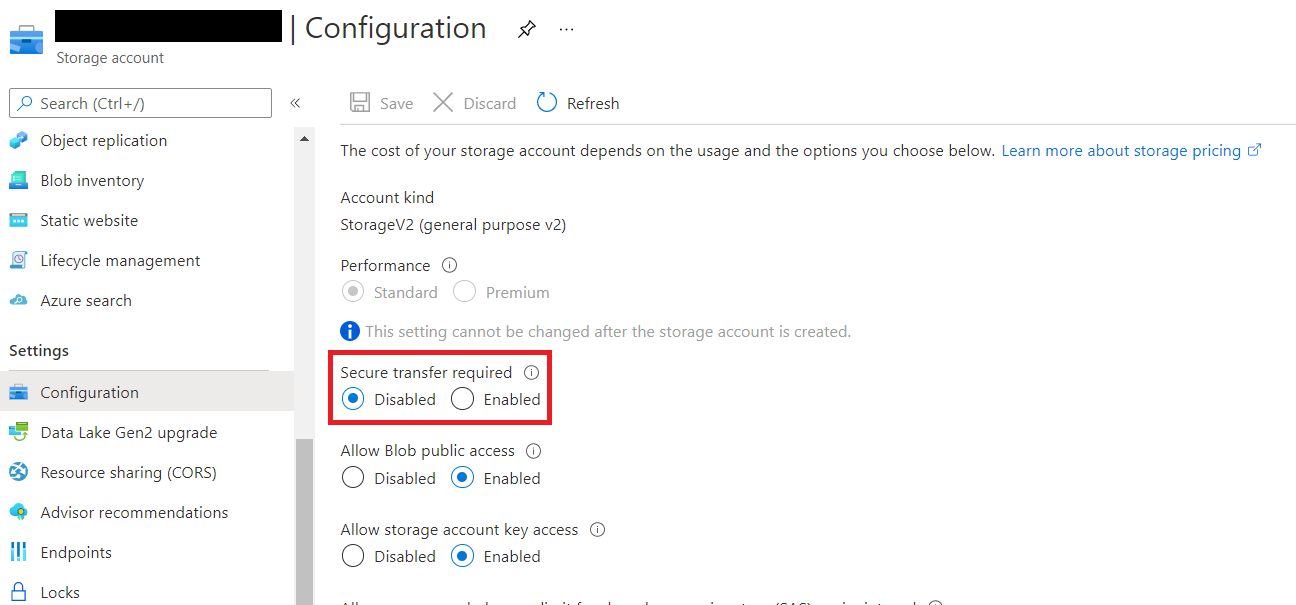

- If your website uses HTTP instead of HTTPS, make sure that “Secure transfer required” is set to Disabled under Storage Account > Settings > Configuration.

Edit the YAML files:

Define the following keys in the /edx/etc/lms.yml and /edx/etc/studio.yml files:

    AZURE_ACCOUNT_NAME: [YOUR_AZURE_ACCOUNT_NAME]
    AZURE_ACCOUNT_KEY: [YOUR_AZURE_ACCOUNT_KEY]
    AZURE_CONTAINER: [YOUR_AZURE_CONTAINER_NAME]
    REMOTE_FILE_STORAGE_STRING: 'openedx.core.storage.AzureStorageExtended'
    LOCAL_FILE_STORAGE_STRING: 'django.core.files.storage.FileSystemStorage'

Define the following keys only in the /edx/etc/lms.yml file:

    AZURE_LOCATION: ''
    SERVICE_NAME: LMS

Define the following keys only in the /edx/etc/studio.yml file:

    AZURE_LOCATION: studio
    SERVICE_NAME: CMS
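
The LMS uses an empty AZURE_LOCATION and Studio uses studio. AzureStorageExtended (added to edx-platform/openedx/core/storage.py later in this guide) turns that value into a prefix for blob names, so LMS files land at the container root and Studio files under studio/. A minimal sketch of that rule; location_prefix and blob_name are hypothetical helper names that mirror the class's location expression:

```python
def location_prefix(azure_location: str) -> str:
    """Mirror of the `location` class attribute in AzureStorageExtended.

    An empty AZURE_LOCATION (LMS) gives no prefix; any other value
    (e.g. "studio" for CMS) becomes "<value>/".
    """
    return azure_location + "/" if azure_location != "" else ""


def blob_name(azure_location: str, name: str) -> str:
    """Full blob name inside the container (hypothetical helper)."""
    return location_prefix(azure_location) + name


print(blob_name("", "grades/report.csv"))                  # LMS
print(blob_name("studio", "olx_import/course.tar.gz"))     # Studio
```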

Install the Azure Storage Python packages using the following commands:

$ pip install azure-storage-blob==2.0.1

$ pip install azure-storage-file==2.0.1

$ pip install azure-storage-queue==2.0.1

$ pip install azure-nspkg==3.0.2

Now, modify the following files accordingly.

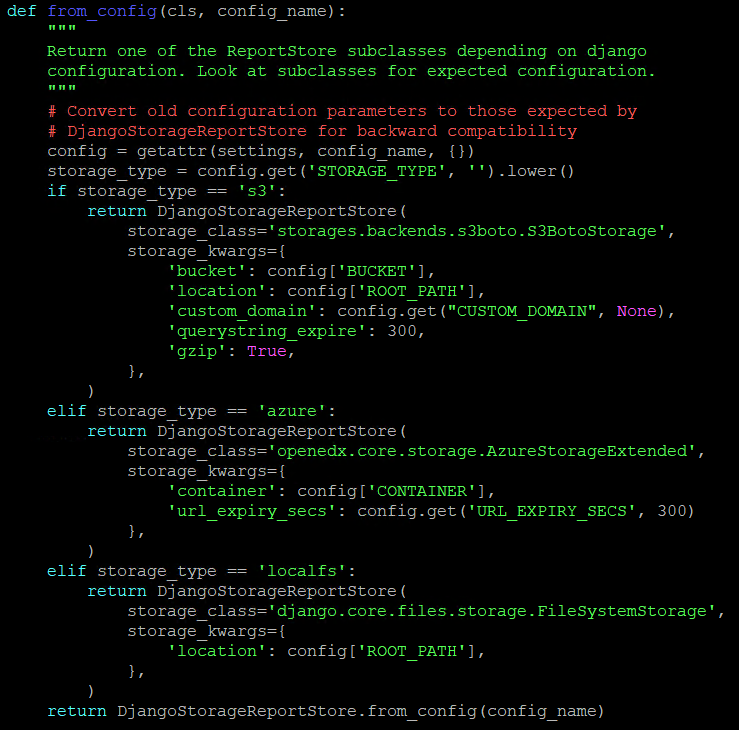

edx-platform/lms/djangoapps/instructor_task/models.py (Class: ReportStore, Function: from_config)

Add the following branch to from_config in the file above:

    elif storage_type == 'azure':
        return DjangoStorageReportStore(
            storage_class='openedx.core.storage.AzureStorageExtended',
            storage_kwargs={
                'container': config['CONTAINER'],
                'url_expiry_secs': config.get('URL_EXPIRY_SECS', 300)
            },
        )

Screenshot for Reference:

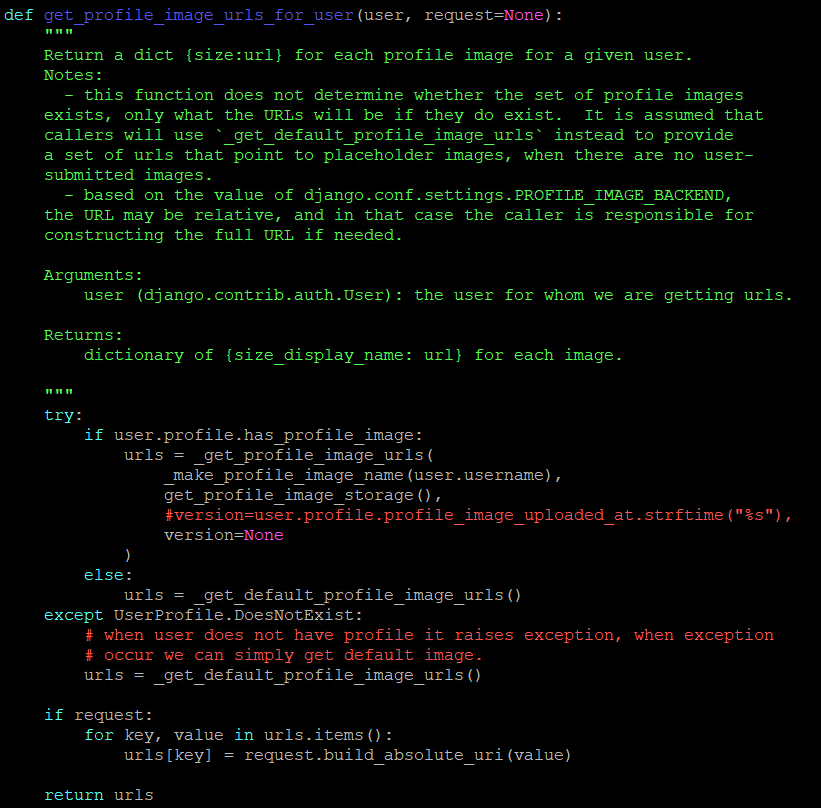
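
The new branch is only taken when the report store's STORAGE_TYPE is 'azure', and it reads two keys from that configuration: CONTAINER, which is required, and URL_EXPIRY_SECS, which falls back to 300 seconds. A small sketch of the kwargs it builds; azure_report_store_kwargs and the sample container name are hypothetical:

```python
def azure_report_store_kwargs(config: dict) -> dict:
    """Build the storage_kwargs used by the 'azure' branch (hypothetical helper).

    A missing CONTAINER raises KeyError, just like config['CONTAINER'];
    URL_EXPIRY_SECS defaults to 300 seconds.
    """
    return {
        "container": config["CONTAINER"],
        "url_expiry_secs": config.get("URL_EXPIRY_SECS", 300),
    }


example_config = {"STORAGE_TYPE": "azure", "CONTAINER": "reports"}
print(azure_report_store_kwargs(example_config))
# {'container': 'reports', 'url_expiry_secs': 300}
```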

edx-platform/openedx/core/djangoapps/user_api/accounts/image_helpers.py (Function: get_profile_image_urls_for_user)

In the file above, replace the following line:

    version=user.profile.profile_image_uploaded_at.strftime("%s"),

with:

    version=None,

Screenshot for Reference:

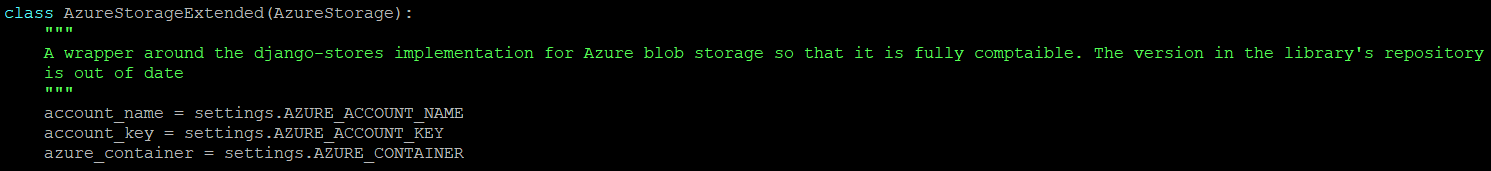

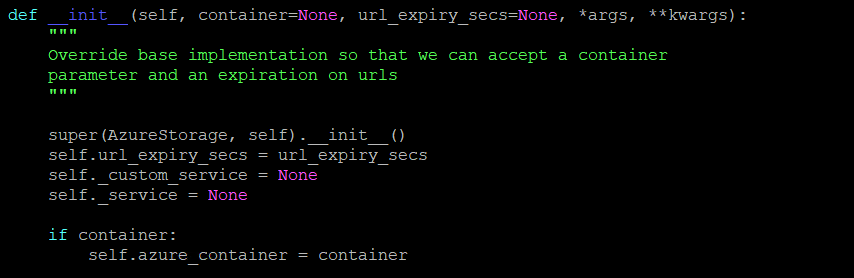

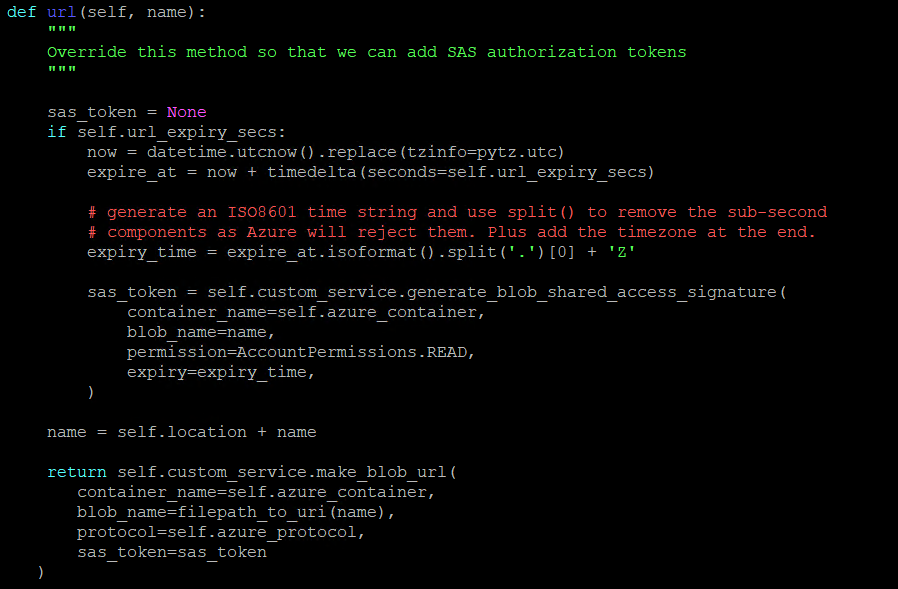

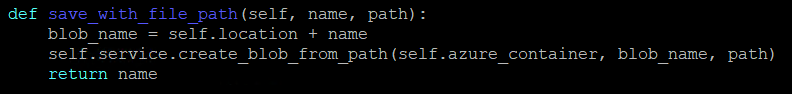

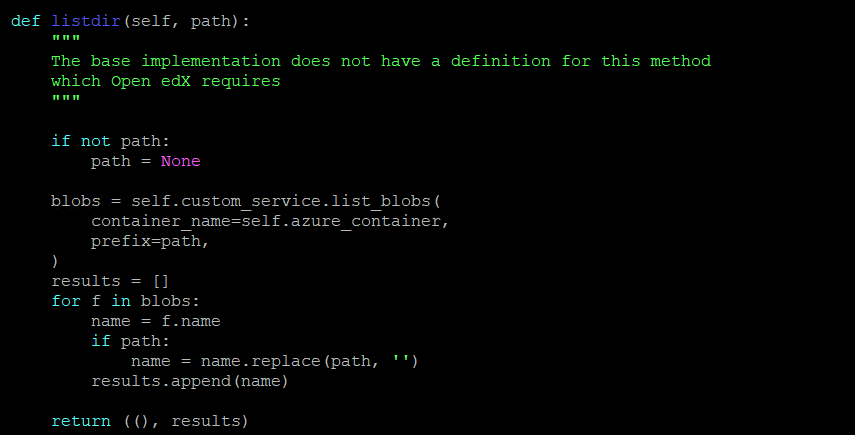

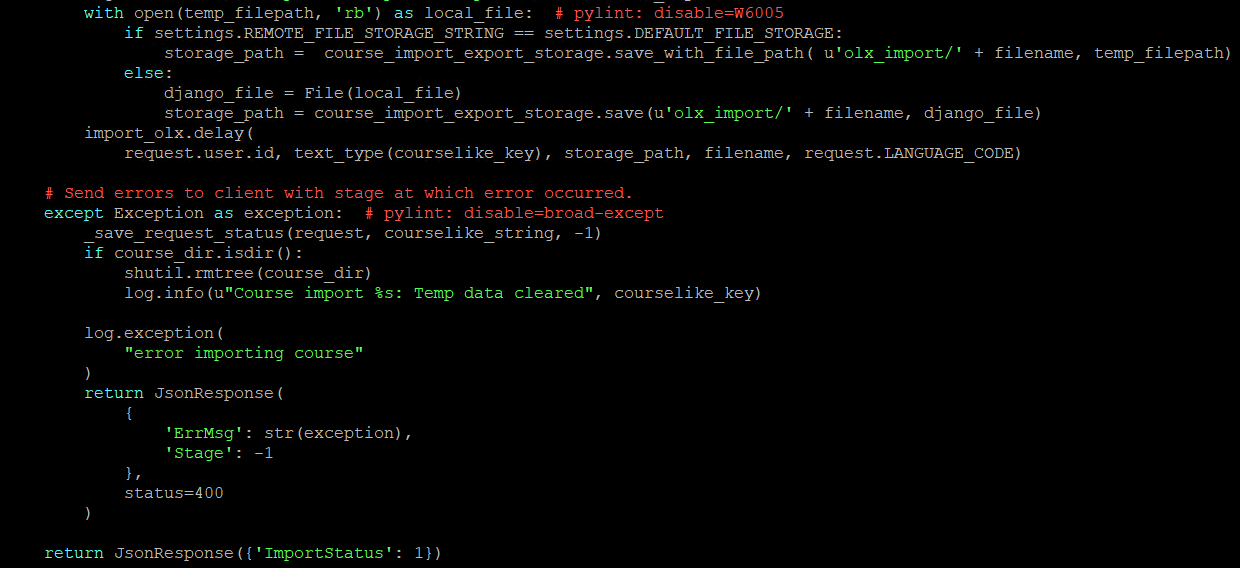

edx-platform/openedx/core/storage.py

Add the following code to the file above:

    from datetime import datetime, timedelta

    import pytz
    from azure.storage.common.models import AccountPermissions
    from django.conf import settings
    from django.utils.encoding import filepath_to_uri
    from storages.backends.azure_storage import AzureStorage


    class AzureStorageExtended(AzureStorage):
        """
        A wrapper around the django-storages implementation for Azure Blob Storage
        so that it is fully compatible with Open edX. The version in the library's
        repository is out of date.
        """
        account_name = settings.AZURE_ACCOUNT_NAME
        account_key = settings.AZURE_ACCOUNT_KEY
        azure_container = settings.AZURE_CONTAINER
        location = settings.AZURE_LOCATION + "/" if settings.AZURE_LOCATION != '' else ''

        def __init__(self, container=None, url_expiry_secs=None, *args, **kwargs):
            """
            Override the base implementation so that we can accept a container
            parameter and an expiration time for URLs.
            """
            # super(AzureStorage, self) skips AzureStorage.__init__ and calls the
            # next initializer in the MRO.
            super(AzureStorage, self).__init__()
            self.url_expiry_secs = url_expiry_secs
            self._custom_service = None
            self._service = None
            if container:
                self.azure_container = container

        def url(self, name):
            """
            Override this method so that we can add SAS authorization tokens.
            """
            # Prefix the location first so that the SAS token is signed for the
            # same blob name that appears in the returned URL.
            name = self.location + name
            sas_token = None
            if self.url_expiry_secs:
                now = datetime.utcnow().replace(tzinfo=pytz.utc)
                expire_at = now + timedelta(seconds=self.url_expiry_secs)
                # Azure rejects sub-second components, so format the expiry as an
                # ISO 8601 UTC time with whole seconds and a trailing 'Z'.
                expiry_time = expire_at.strftime('%Y-%m-%dT%H:%M:%SZ')
                sas_token = self.custom_service.generate_blob_shared_access_signature(
                    container_name=self.azure_container,
                    blob_name=name,
                    permission=AccountPermissions.READ,
                    expiry=expiry_time,
                )
            return self.custom_service.make_blob_url(
                container_name=self.azure_container,
                blob_name=filepath_to_uri(name),
                protocol=self.azure_protocol,
                sas_token=sas_token,
            )

        def save_with_file_path(self, name, path):
            """
            Upload the local file at `path` as blob `name` under the storage location.
            """
            blob_name = self.location + name
            self.service.create_blob_from_path(self.azure_container, blob_name, path)
            return name

        def listdir(self, path):
            """
            The base implementation does not define this method, which Open edX requires.
            Returns an empty tuple of subdirectories and the blob names under `path`,
            with `path` stripped from each name.
            """
            blobs = self.custom_service.list_blobs(
                container_name=self.azure_container,
                prefix=self.location + (path or ''),
            )
            results = []
            for f in blobs:
                name = f.name
                if path:
                    name = name.replace(path, '')
                results.append(name)
            return ((), results)

Screenshot for Reference:

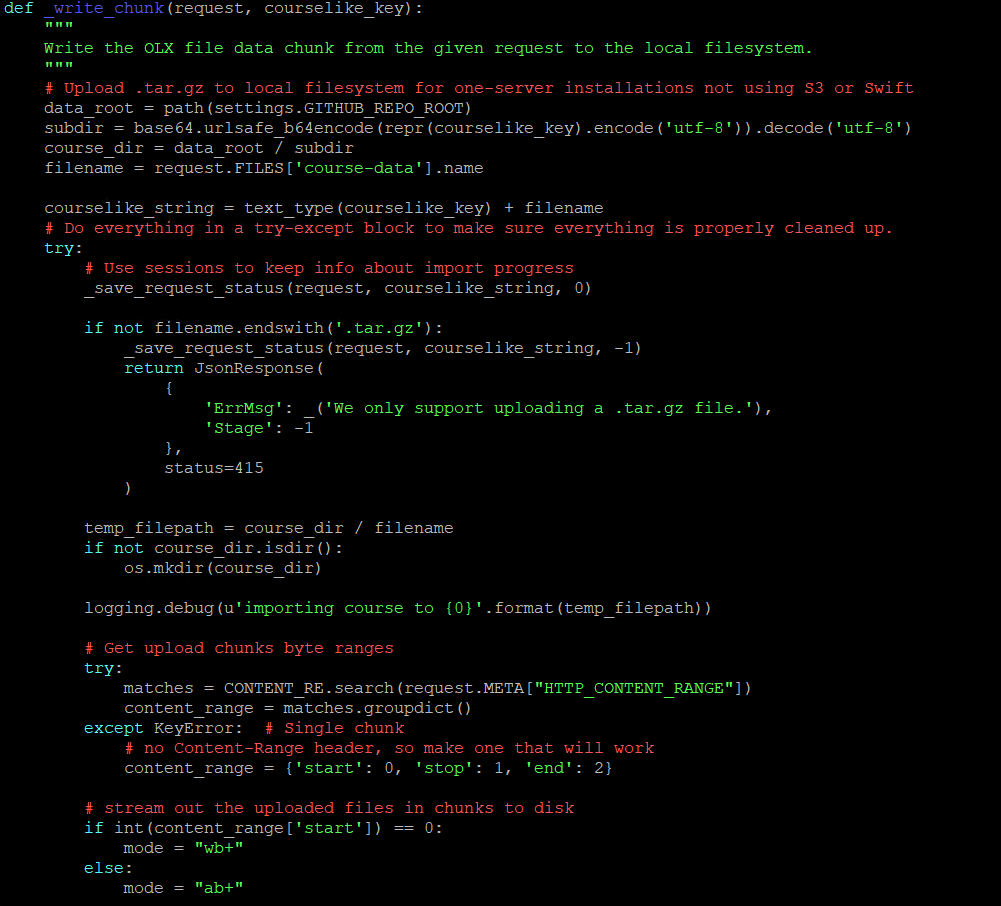

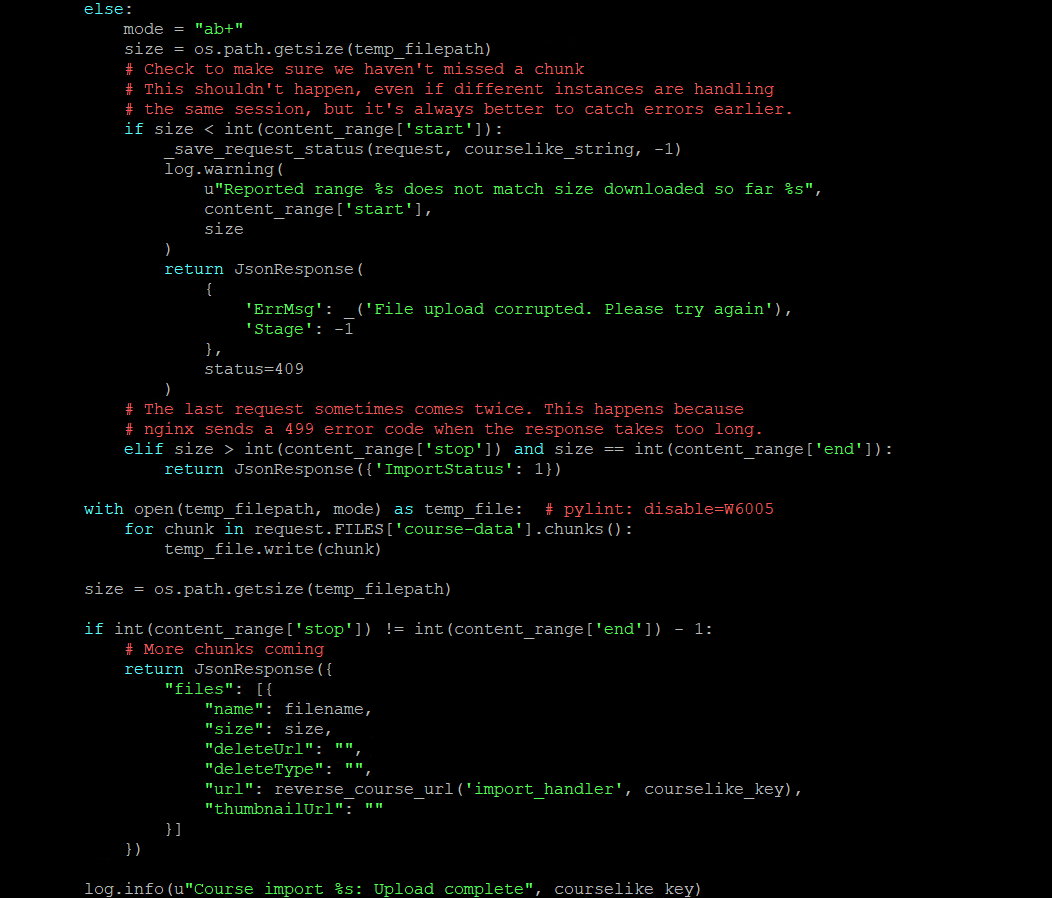
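
As the comment in url() notes, Azure rejects sub-second components in the SAS expiry, so the value must be an ISO 8601 UTC timestamp with whole seconds. A standalone sketch of that formatting; sas_expiry is a hypothetical name, and it uses the standard library's timezone.utc in place of pytz. It also shows why splitting isoformat() on '.' is fragile: when microseconds are zero, isoformat() omits the fractional part and keeps the +00:00 offset.

```python
from datetime import datetime, timedelta, timezone


def sas_expiry(now: datetime, url_expiry_secs: int) -> str:
    """Return the SAS expiry as 'YYYY-MM-DDTHH:MM:SSZ' (UTC, whole seconds)."""
    expire_at = now.astimezone(timezone.utc) + timedelta(seconds=url_expiry_secs)
    return expire_at.strftime("%Y-%m-%dT%H:%M:%SZ")


# Fixed timestamp so the result is reproducible.
now = datetime(2024, 1, 1, 12, 0, 0, 123456, tzinfo=timezone.utc)
print(sas_expiry(now, 300))  # 2024-01-01T12:05:00Z

# The isoformat()/split('.') approach breaks when microsecond == 0:
whole = datetime(2024, 1, 1, 12, 5, 0, tzinfo=timezone.utc)
print(whole.isoformat().split(".")[0] + "Z")  # 2024-01-01T12:05:00+00:00Z
```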

edx-platform/cms/djangoapps/contentstore/views/import_export.py (Function: _write_chunk)

Update _write_chunk in the file above to match the following:

    def _write_chunk(request, courselike_key):
        """
        Write the OLX file data chunk from the given request to the local filesystem.
        """
        # Upload .tar.gz to local filesystem for one-server installations not using S3 or Swift
        data_root = path(settings.GITHUB_REPO_ROOT)
        subdir = base64.urlsafe_b64encode(repr(courselike_key).encode('utf-8')).decode('utf-8')
        course_dir = data_root / subdir
        filename = request.FILES['course-data'].name

        courselike_string = text_type(courselike_key) + filename
        # Do everything in a try-except block to make sure everything is properly cleaned up.
        try:
            # Use sessions to keep info about import progress
            _save_request_status(request, courselike_string, 0)

            if not filename.endswith('.tar.gz'):
                _save_request_status(request, courselike_string, -1)
                return JsonResponse(
                    {
                        'ErrMsg': _('We only support uploading a .tar.gz file.'),
                        'Stage': -1
                    },
                    status=415
                )

            temp_filepath = course_dir / filename
            if not course_dir.isdir():
                os.mkdir(course_dir)

            logging.debug(u'importing course to {0}'.format(temp_filepath))

            # Get upload chunks byte ranges
            try:
                matches = CONTENT_RE.search(request.META["HTTP_CONTENT_RANGE"])
                content_range = matches.groupdict()
            except KeyError:  # Single chunk
                # no Content-Range header, so make one that will work
                content_range = {'start': 0, 'stop': 1, 'end': 2}

            # stream out the uploaded files in chunks to disk
            if int(content_range['start']) == 0:
                mode = "wb+"
            else:
                mode = "ab+"
                size = os.path.getsize(temp_filepath)
                # Check to make sure we haven't missed a chunk
                # This shouldn't happen, even if different instances are handling
                # the same session, but it's always better to catch errors earlier.
                if size < int(content_range['start']):
                    _save_request_status(request, courselike_string, -1)
                    log.warning(
                        u"Reported range %s does not match size downloaded so far %s",
                        content_range['start'],
                        size
                    )
                    return JsonResponse(
                        {
                            'ErrMsg': _('File upload corrupted. Please try again'),
                            'Stage': -1
                        },
                        status=409
                    )
                # The last request sometimes comes twice. This happens because
                # nginx sends a 499 error code when the response takes too long.
                elif size > int(content_range['stop']) and size == int(content_range['end']):
                    return JsonResponse({'ImportStatus': 1})

            with open(temp_filepath, mode) as temp_file:  # pylint: disable=W6005
                for chunk in request.FILES['course-data'].chunks():
                    temp_file.write(chunk)

            size = os.path.getsize(temp_filepath)

            if int(content_range['stop']) != int(content_range['end']) - 1:
                # More chunks coming
                return JsonResponse({
                    "files": [{
                        "name": filename,
                        "size": size,
                        "deleteUrl": "",
                        "deleteType": "",
                        "url": reverse_course_url('import_handler', courselike_key),
                        "thumbnailUrl": ""
                    }]
                })

            log.info(u"Course import %s: Upload complete", courselike_key)
            with open(temp_filepath, 'rb') as local_file:  # pylint: disable=W6005
                if settings.REMOTE_FILE_STORAGE_STRING == settings.DEFAULT_FILE_STORAGE:
                    # Remote (Azure) storage: upload directly from the temporary file path.
                    storage_path = course_import_export_storage.save_with_file_path(
                        u'olx_import/' + filename, temp_filepath)
                else:
                    django_file = File(local_file)
                    storage_path = course_import_export_storage.save(u'olx_import/' + filename, django_file)
                import_olx.delay(
                    request.user.id, text_type(courselike_key), storage_path, filename, request.LANGUAGE_CODE)

        # Send errors to the client with the stage at which the error occurred.
        except Exception as exception:  # pylint: disable=broad-except
            _save_request_status(request, courselike_string, -1)
            if course_dir.isdir():
                shutil.rmtree(course_dir)
                log.info(u"Course import %s: Temp data cleared", courselike_key)

            log.exception(
                "error importing course"
            )
            return JsonResponse(
                {
                    'ErrMsg': str(exception),
                    'Stage': -1
                },
                status=400
            )

        return JsonResponse({'ImportStatus': 1})

Screenshot for Reference:

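The Azure-specific part of _write_chunk is the storage call at the end: when DEFAULT_FILE_STORAGE equals REMOTE_FILE_STORAGE_STRING, the finished upload is sent with save_with_file_path (defined in AzureStorageExtended) instead of save. A minimal sketch of that decision with a hypothetical recording storage, so it runs without Django or Azure; RecordingStorage and store_olx are illustrative names only:

```python
class RecordingStorage:
    """Hypothetical stand-in for course_import_export_storage that records calls."""

    def __init__(self):
        self.calls = []

    def save_with_file_path(self, name, path):
        self.calls.append(("save_with_file_path", name, path))
        return name

    def save(self, name, content):
        self.calls.append(("save", name))
        return name


def store_olx(storage, default_file_storage, remote_file_storage_string,
              filename, temp_filepath, local_file):
    """Pick the upload call the same way the modified _write_chunk does."""
    name = "olx_import/" + filename
    if remote_file_storage_string == default_file_storage:
        # Azure: upload directly from the temporary file on disk.
        return storage.save_with_file_path(name, temp_filepath)
    # Local storage: the real code wraps local_file in django.core.files.File first.
    return storage.save(name, local_file)


REMOTE = "openedx.core.storage.AzureStorageExtended"
LOCAL = "django.core.files.storage.FileSystemStorage"

azure = RecordingStorage()
store_olx(azure, REMOTE, REMOTE, "course.tar.gz", "/tmp/course.tar.gz", None)
print(azure.calls)
```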
Migrate Data from Local Storage to Azure Blob Storage:

Download AzCopy to the root directory and extract it using the following commands:

$ wget https://aka.ms/downloadazcopy-v10-linux

$ tar -xvf downloadazcopy-v10-linux

After that, go to the extracted AzCopy directory and run the following commands to migrate the folders from local storage to Azure Blob Storage. [SAS_Token] is the container's SAS token; it is appended to the URL as a query string after ?, so paste it without its own leading ?:

$ ./azcopy copy '/edx/var/edxapp/staticfiles/*' 'https://[AZURE_STORAGE_ACCOUNT_NAME].blob.core.windows.net/[AZURE_BLOB_NAME]?[SAS_Token]' --recursive

$ ./azcopy copy '/edx/app/edxapp/themes/*' 'https://[AZURE_STORAGE_ACCOUNT_NAME].blob.core.windows.net/[AZURE_BLOB_NAME]?[SAS_Token]' --recursive

$ ./azcopy copy '/edx/var/edxapp/media/*' 'https://[AZURE_STORAGE_ACCOUNT_NAME].blob.core.windows.net/[AZURE_BLOB_NAME]?[SAS_Token]' --recursive

$ ./azcopy copy '/edx/var/edxapp/media/scorm' 'https://[AZURE_STORAGE_ACCOUNT_NAME].blob.core.windows.net/[AZURE_BLOB_NAME]/studio?[SAS_Token]' --recursive

$ ./azcopy copy '/edx/var/edxapp/media/video-transcripts/*' 'https://[AZURE_STORAGE_ACCOUNT_NAME].blob.core.windows.net/[AZURE_BLOB_NAME]/studio/video-transcripts?[SAS_Token]' --recursive

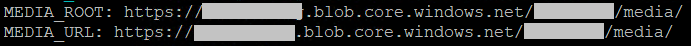
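
Each AzCopy destination is the container URL, an optional virtual directory, and the SAS token as a query string, matching the form in AzCopy's documentation (https://<account>.blob.core.windows.net/<container>/<directory>?<SAS-token>); [AZURE_BLOB_NAME] in these commands is the container name. A small sketch that assembles such URLs; azcopy_destination and the sample values are hypothetical:

```python
def azcopy_destination(account, container, virtual_dir, sas_token):
    """Build an `azcopy copy` destination URL (hypothetical helper).

    The SAS token is a query string, so it follows '?'. A leading '?'
    copied from the Azure portal is stripped to avoid '??'.
    """
    path = container
    if virtual_dir:
        path += "/" + virtual_dir.strip("/")
    return "https://{}.blob.core.windows.net/{}?{}".format(account, path, sas_token.lstrip("?"))


print(azcopy_destination("myaccount", "mycontainer", "studio/video-transcripts/", "sv=2020-08-04&sig=abc"))
# https://myaccount.blob.core.windows.net/mycontainer/studio/video-transcripts?sv=2020-08-04&sig=abc
```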

Configure MEDIA_ROOT and MEDIA_URL to Azure Blob Storage:

Replace the value for MEDIA_ROOT and MEDIA_URL with the following in the /edx/etc/lms.yml and /edx/etc/studio.yml:

MEDIA_ROOT: https://[AZURE_STORAGE_ACCOUNT_NAME].blob.core.windows.net/[AZURE_BLOB_NAME]/media/

MEDIA_URL: https://[AZURE_STORAGE_ACCOUNT_NAME].blob.core.windows.net/[AZURE_BLOB_NAME]/media/

Screenshot for Reference:

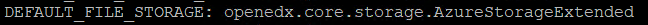

Configure DEFAULT_FILE_STORAGE to Azure Blob Storage:

Replace the value for DEFAULT_FILE_STORAGE with the following in the /edx/etc/lms.yml and /edx/etc/studio.yml:

DEFAULT_FILE_STORAGE: openedx.core.storage.AzureStorageExtended

Screenshot for Reference:

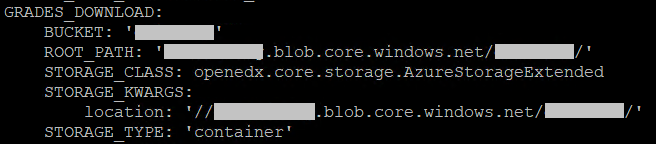

Configure GRADES_DOWNLOAD to Azure Blob Storage:

Replace the value for GRADES_DOWNLOAD with the following in the /edx/etc/lms.yml and /edx/etc/studio.yml:

    GRADES_DOWNLOAD:
        BUCKET: '[AZURE_BLOB_NAME]'
        ROOT_PATH: '[AZURE_STORAGE_ACCOUNT_NAME].blob.core.windows.net/[AZURE_BLOB_NAME]/'
        STORAGE_CLASS: openedx.core.storage.AzureStorageExtended
        STORAGE_KWARGS:
            location: '//[AZURE_STORAGE_ACCOUNT_NAME].blob.core.windows.net/[AZURE_BLOB_NAME]/'
        STORAGE_TYPE: 'container'

Screenshot for Reference:

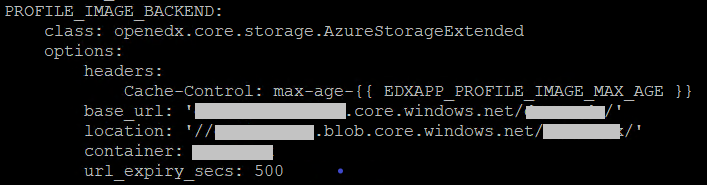

Configure PROFILE_IMAGE_BACKEND to Azure Blob Storage:

Replace the value for PROFILE_IMAGE_BACKEND with the following in the /edx/etc/lms.yml:

    PROFILE_IMAGE_BACKEND:
        class: openedx.core.storage.AzureStorageExtended
        options:
            headers:
                Cache-Control: max-age={{ EDXAPP_PROFILE_IMAGE_MAX_AGE }}
            base_url: '[AZURE_STORAGE_ACCOUNT_NAME].blob.core.windows.net/[AZURE_BLOB_NAME]/'
            location: '//[AZURE_STORAGE_ACCOUNT_NAME].blob.core.windows.net/[AZURE_BLOB_NAME]/'
            container: [AZURE_BLOB_NAME]
            url_expiry_secs: 500

Screenshot for Reference:

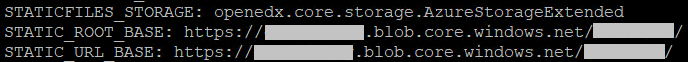
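
Assuming Open edX instantiates the profile image backend as class(**options) (check how image_helpers.py builds it in your release), only container and url_expiry_secs change the behavior of AzureStorageExtended as written in this guide; headers, base_url, and location are absorbed by **kwargs and not stored. The stub below copies just that __init__ signature so the mapping can be checked without Azure credentials; the class name and sample values are hypothetical:

```python
class AzureStorageExtendedStub:
    """Hypothetical stand-in with the same __init__ signature as AzureStorageExtended."""

    azure_container = "default-container"  # stands in for settings.AZURE_CONTAINER

    def __init__(self, container=None, url_expiry_secs=None, *args, **kwargs):
        self.url_expiry_secs = url_expiry_secs
        self.ignored = sorted(kwargs)  # options the real class accepts but does not use
        if container:
            self.azure_container = container


options = {
    "headers": {"Cache-Control": "max-age=3600"},
    "base_url": "myaccount.blob.core.windows.net/mycontainer/",
    "location": "//myaccount.blob.core.windows.net/mycontainer/",
    "container": "mycontainer",
    "url_expiry_secs": 500,
}
storage = AzureStorageExtendedStub(**options)
print(storage.azure_container, storage.url_expiry_secs, storage.ignored)
# mycontainer 500 ['base_url', 'headers', 'location']
```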

Configure Static Files to Azure Blob Storage:

Add the following key-value pair to the /edx/etc/lms.yml and /edx/etc/studio.yml:

STATICFILES_STORAGE: openedx.core.storage.AzureStorageExtended

Replace the value for STATIC_ROOT_BASE and STATIC_URL_BASE with the following in the /edx/etc/lms.yml and /edx/etc/studio.yml:

STATIC_ROOT_BASE: https://[AZURE_STORAGE_ACCOUNT_NAME].blob.core.windows.net/[AZURE_BLOB_NAME]/

STATIC_URL_BASE: https://[AZURE_STORAGE_ACCOUNT_NAME].blob.core.windows.net/[AZURE_BLOB_NAME]/

Screenshot for Reference:

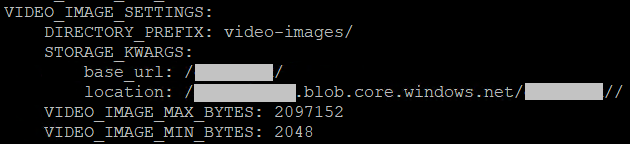

Configure VIDEO_IMAGE_SETTINGS to Azure Blob Storage:

Replace the value for VIDEO_IMAGE_SETTINGS with the following in the /edx/etc/lms.yml and /edx/etc/studio.yml:

    VIDEO_IMAGE_SETTINGS:
        DIRECTORY_PREFIX: video-images/
        STORAGE_KWARGS:
            base_url: /[AZURE_BLOB_NAME]/
            location: /[AZURE_STORAGE_ACCOUNT_NAME].blob.core.windows.net/[AZURE_BLOB_NAME]//
        VIDEO_IMAGE_MAX_BYTES: 2097152
        VIDEO_IMAGE_MIN_BYTES: 2048

Screenshot for Reference:

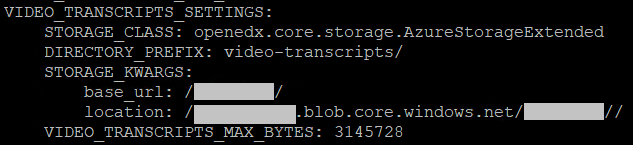

Configure VIDEO_TRANSCRIPTS_SETTINGS to Azure Blob Storage:

Replace the value for VIDEO_TRANSCRIPTS_SETTINGS with the following in the /edx/etc/lms.yml and /edx/etc/studio.yml:

    VIDEO_TRANSCRIPTS_SETTINGS:
        STORAGE_CLASS: openedx.core.storage.AzureStorageExtended
        DIRECTORY_PREFIX: video-transcripts/
        STORAGE_KWARGS:
            base_url: /[AZURE_BLOB_NAME]/
            location: /[AZURE_STORAGE_ACCOUNT_NAME].blob.core.windows.net/[AZURE_BLOB_NAME]//
        VIDEO_TRANSCRIPTS_MAX_BYTES: 3145728

Screenshot for Reference:

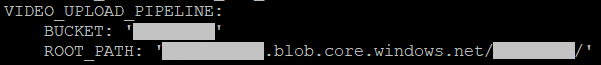
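
The byte limits in VIDEO_IMAGE_SETTINGS and VIDEO_TRANSCRIPTS_SETTINGS are binary multiples. A quick check of what they correspond to; the constant names mirror the YAML keys, and KIB/MIB are illustrative helpers:

```python
KIB = 1024
MIB = 1024 * KIB

# Values from VIDEO_IMAGE_SETTINGS and VIDEO_TRANSCRIPTS_SETTINGS above.
VIDEO_IMAGE_MAX_BYTES = 2097152
VIDEO_IMAGE_MIN_BYTES = 2048
VIDEO_TRANSCRIPTS_MAX_BYTES = 3145728

print(VIDEO_IMAGE_MAX_BYTES / MIB, VIDEO_IMAGE_MIN_BYTES / KIB, VIDEO_TRANSCRIPTS_MAX_BYTES / MIB)
# 2.0 2.0 3.0
```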

Configure VIDEO_UPLOAD_PIPELINE to Azure Blob Storage:

Replace the value for VIDEO_UPLOAD_PIPELINE with the following in the /edx/etc/lms.yml and /edx/etc/studio.yml:

    VIDEO_UPLOAD_PIPELINE:
        BUCKET: '[AZURE_BLOB_NAME]'
        ROOT_PATH: '[AZURE_STORAGE_ACCOUNT_NAME].blob.core.windows.net/[AZURE_BLOB_NAME]/'

Screenshot for Reference:

Configure SCORM to Azure Blob Storage:

Create a scorm_storage function:

Add the following function to the edx-platform/openedx/core/storage.py file:

    def scorm_storage(xblock):
        import os

        from django.conf import settings
        from django.core.files.storage import get_storage_class
        from openedx.core.djangoapps.site_configuration.models import SiteConfiguration

        # Subfolder "scorm" in the media directory, i.e. media/scorm
        subfolder = SiteConfiguration.get_value_for_org(
            xblock.location.org, "SCORM_STORAGE_NAME", "scorm/"
        )
        location = settings.AZURE_LOCATION + "/" if settings.AZURE_LOCATION != '' else ''
        storage_location = os.path.join(settings.MEDIA_ROOT + location, subfolder)

        # Check whether remote storage is in use. Remote content is served
        # through the /scorm-xblock/ reverse proxy configured in nginx, which
        # avoids CORS-policy errors.
        if settings.REMOTE_FILE_STORAGE_STRING == settings.DEFAULT_FILE_STORAGE:
            # LMS_ROOT_URL has no trailing slash, so the path starts with "/".
            return get_storage_class(settings.DEFAULT_FILE_STORAGE)(
                location=storage_location, base_url=settings.LMS_ROOT_URL + '/scorm-xblock/'
            )
        else:
            return get_storage_class(settings.DEFAULT_FILE_STORAGE)(
                location=storage_location, base_url=settings.MEDIA_URL + subfolder
            )
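
The storage_location passed to the storage class is MEDIA_ROOT, then the AZURE_LOCATION prefix, then the SCORM subfolder. A standalone sketch of that path arithmetic; scorm_storage_location and the sample MEDIA_ROOT are hypothetical, and posixpath.join is used because it matches os.path.join on the Linux hosts Open edX runs on. Note that AzureStorageExtended's __init__, as given earlier in this guide, accepts location only through **kwargs and does not store it, so this value matters mainly when DEFAULT_FILE_STORAGE is the local FileSystemStorage.

```python
import posixpath


def scorm_storage_location(media_root, azure_location, subfolder="scorm/"):
    """Mirror of the storage_location computation in scorm_storage (hypothetical helper)."""
    location = azure_location + "/" if azure_location != "" else ""
    return posixpath.join(media_root + location, subfolder)


media_root = "https://myaccount.blob.core.windows.net/mycontainer/media/"
print(scorm_storage_location(media_root, "studio"))  # Studio: .../media/studio/scorm/
print(scorm_storage_location(media_root, ""))        # LMS: .../media/scorm/
```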

Clone SCORM app in edx-platform:

Clone the SCORM app using the following command in the edx-platform/common/djangoapps/ directory:

$ git clone https://github.com/overhangio/openedx-scorm-xblock

Run the following command in the edx-platform/common/djangoapps/openedx-scorm-xblock/ directory (make sure the edxapp environment is sourced):

$ pip install -e .

Edit the scormxblock.py file:

Update the following functions in the edx-platform/common/djangoapps/openedx-scorm-xblock/openedxscorm/scormxblock.py file so that they match the definitions below; this makes the SCORM XBlock compatible with Azure Blob Storage:

Add the following import at the top of the file:

    from django.conf import settings

Function student_view:

    def student_view(self, context=None):
        student_context = {
            "index_page_url": self.index_page_url,
            "index_page_url_with_proxy": self.index_page_url_with_proxy,
            "completion_status": self.lesson_status,
            "grade": self.get_grade(),
            "scorm_xblock": self,
            "is_local_filestorage": settings.DEFAULT_FILE_STORAGE == settings.LOCAL_FILE_STORAGE_STRING,
        }
        # The rest of student_view stays as it is in the upstream file.

Function index_page_url_with_proxy:

    @property
    def index_page_url_with_proxy(self):
        if not self.package_meta or not self.index_page_path:
            return ""
        folder = self.extract_folder_path
        if self.storage.exists(
            os.path.join(self.extract_folder_base_path, self.index_page_path)
        ):
            # For backward-compatibility, we must handle the case when the xblock data
            # is stored in the base folder.
            folder = self.extract_folder_base_path
            logger.warning("Serving SCORM content from old-style path: %s", folder)
        # The iframe URL is rewritten so that nginx can proxy-pass it to remote storage;
        # see "location ~* ^/scorm-xblock/" in /etc/nginx/sites-enabled/cms or /etc/nginx/sites-enabled/lms
        folder = re.sub(r"/scorm/", '/scorm-xblock/', '/' + folder)
        if settings.SERVICE_NAME == 'LMS':
            return settings.LMS_ROOT_URL + folder + '/' + self.index_page_path
        if settings.SERVICE_NAME == 'CMS':
            return settings.STUDIO_ROOT_URL + folder + '/' + self.index_page_path
        return self.storage.url(os.path.join(folder, self.index_page_path))

Function extract_folder_path:

    @property
    def extract_folder_path(self):
        """
        This path needs to depend on the content of the scorm package. Otherwise,
        served media files might become stale when the package is updated.
        """
        url_main = os.path.join(self.extract_folder_base_path, self.package_meta["sha1"])
        return url_main

Function update_package_fields:

    def update_package_fields(self):
        """
        Update version and index page path fields.
        """
        # Check whether the default file storage is local or remote;
        # this determines how the imsmanifest.xml file is located.
        if settings.DEFAULT_FILE_STORAGE == settings.REMOTE_FILE_STORAGE_STRING:
            imsmanifest_path = self.find_manifest_file_path("/imsmanifest.xml")
        else:
            imsmanifest_path = self.find_file_path("imsmanifest.xml")
        imsmanifest_file = self.storage.open(imsmanifest_path)
        tree = ET.parse(imsmanifest_file)
        imsmanifest_file.seek(0)
        namespace = ""
        for _, node in ET.iterparse(imsmanifest_file, events=["start-ns"]):
            if node[0] == "":
                namespace = node[1]
                break
        root = tree.getroot()

        prefix = "{" + namespace + "}" if namespace else ""
        resource = root.find(
            "{prefix}resources/{prefix}resource[@href]".format(prefix=prefix)
        )
        schemaversion = root.find(
            "{prefix}metadata/{prefix}schemaversion".format(prefix=prefix)
        )

        if resource is not None:
            self.index_page_path = resource.get("href")
        else:
            self.index_page_path = self.find_relative_file_path("index.html")
        if (schemaversion is not None) and (
            re.match("^1.2$", schemaversion.text) is None
        ):
            self.scorm_version = "SCORM_2004"
        else:
            self.scorm_version = "SCORM_12"

Create the find_manifest_file_path function:

    def find_manifest_file_path(self, filename):
        path = self.get_manifest_file_path(filename, self.extract_folder_path)
        if path is None:
            raise ScormError(
                "Invalid package: could not find '{}' file".format(filename)
            )
        return path

Create the get_manifest_file_path function:

    def get_manifest_file_path(self, filename, root):
        subfolders, files = self.storage.listdir(root)
        for f in files:
            f = f.split(settings.AZURE_LOCATION + "/")[1]
            logging.info(f)
            if f == filename:
                file_path = root + filename
                return file_path
        for subfolder in subfolders:
            path = self.get_file_path(filename, os.path.join(root, subfolder))
            if path is not None:
                return path
        return None

Function get_file_path:

    def get_file_path(self, filename, root):
        """
        Same as `find_file_path`, but don't raise errors on files not found.
        """
        subfolders, files = self.storage.listdir(root)
        for f in files:
            # The file path is built here for remote storage; otherwise the local
            # media file path is used.
            if f == filename:
                file_path = root + filename
                if settings.REMOTE_FILE_STORAGE_STRING == settings.DEFAULT_FILE_STORAGE:
                    return file_path
                else:
                    return os.path.join(root, filename)
        for subfolder in subfolders:
            path = self.get_file_path(filename, os.path.join(root, subfolder))
            if path is not None:
                return path
        return None

Function scorm_location:

    def scorm_location(self):
        """
        Unzipped files will be stored in a media folder with this name, and thus
        accessible at a URL that also includes this name.
        """
        # Check whether the default file storage is local or remote.
        # If it is local, the additional "scorm" directory is not added;
        # otherwise the src would be "/media/scorm/scorm".
        # For remote storage, the "scorm" directory needs to be added.
        if settings.REMOTE_FILE_STORAGE_STRING == settings.DEFAULT_FILE_STORAGE:
            default_scorm_location = "scorm"
            return self.xblock_settings.get("LOCATION", default_scorm_location)
        else:
            return ""

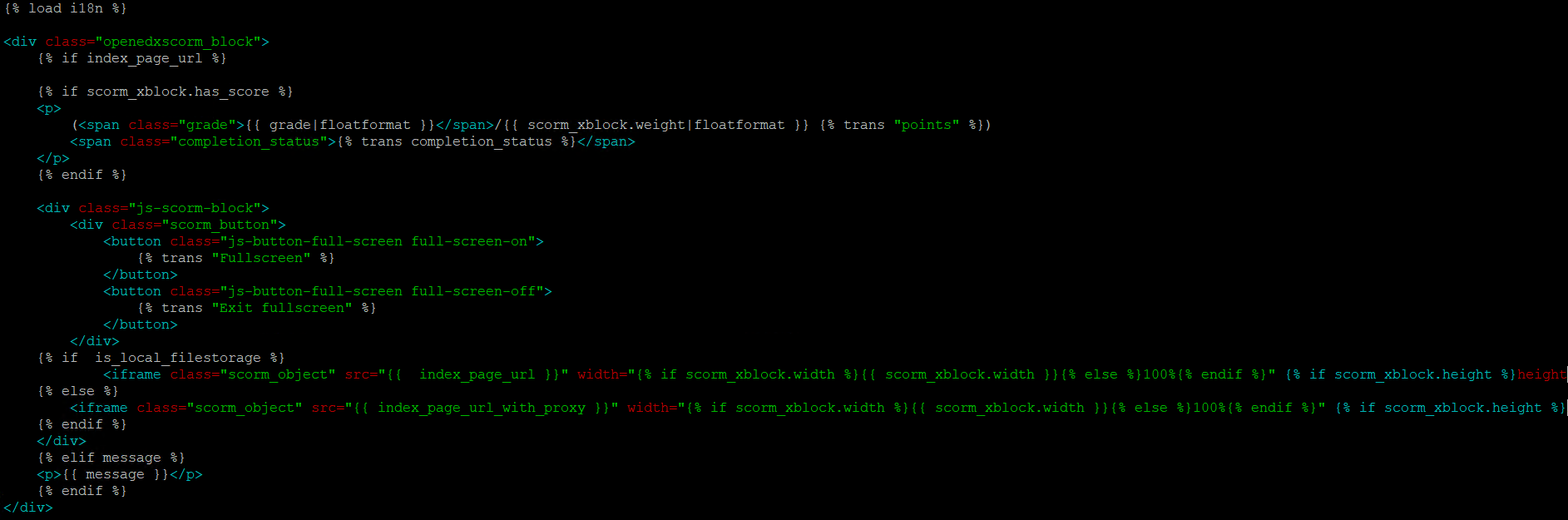
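
index_page_url_with_proxy turns the stored folder path into a same-origin URL under /scorm-xblock/ on the LMS or Studio host, which nginx then proxies to the blob container. A standalone sketch of that string manipulation; proxied_index_url and the sample hosts, block ID, and hash are hypothetical:

```python
import re


def proxied_index_url(service_name, lms_root_url, studio_root_url, folder, index_page_path):
    """Mirror of the URL logic in index_page_url_with_proxy (hypothetical helper).

    `folder` is the extract folder, e.g. "scorm/<block-id>/<sha1>". Returns None
    for any other SERVICE_NAME (the real property falls back to storage.url()).
    """
    folder = re.sub(r"/scorm/", "/scorm-xblock/", "/" + folder)
    if service_name == "LMS":
        return lms_root_url + folder + "/" + index_page_path
    if service_name == "CMS":
        return studio_root_url + folder + "/" + index_page_path
    return None


print(proxied_index_url("LMS", "https://lms.example.com", "https://studio.example.com",
                        "scorm/abc123/deadbeef", "index.html"))
# https://lms.example.com/scorm-xblock/abc123/deadbeef/index.html
```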

Edit the scormxblock.html file:

Edit the scormxblock.html file in the edx-platform/common/djangoapps/openedx-scorm-xblock/openedxscorm/static/html/ directory to match the following:

    {% if is_local_filestorage %}
        <iframe class="scorm_object" src="{{ index_page_url }}" width="{% if scorm_xblock.width %}{{ scorm_xblock.width }}{% else %}100%{% endif %}" {% if scorm_xblock.height %}height="{{ scorm_xblock.height }}" {% endif %}></iframe>
    {% else %}
        <iframe class="scorm_object" src="{{ index_page_url_with_proxy }}" width="{% if scorm_xblock.width %}{{ scorm_xblock.width }}{% else %}100%{% endif %}" {% if scorm_xblock.height %}height="{{ scorm_xblock.height }}" {% endif %}></iframe>
    {% endif %}

Screenshot for Reference:

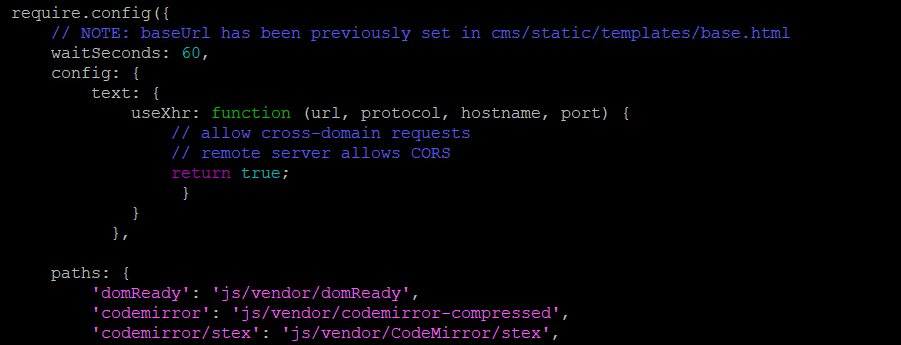

Edit the require-config.js file:

In edx-platform/cms/static/cms/js/require-config.js, update the config section of the require.config call to match the following:

    config: {
        text: {
            useXhr: function (url, protocol, hostname, port) {
                // allow cross-domain requests
                // remote server allows CORS
                return true;
            }
        }
    },

Screenshot for Reference:

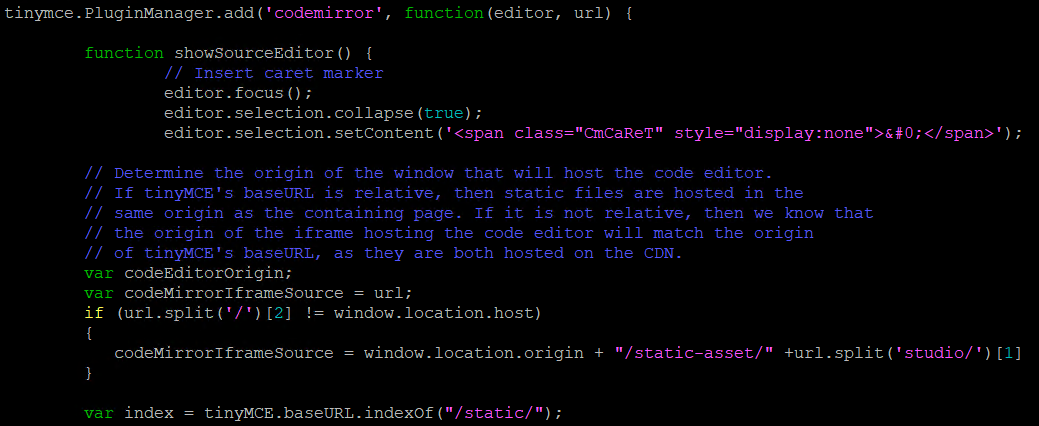

Edit the plugin.js file:

In edx-platform/common/static/js/vendor/tinymce/js/tinymce/plugins/codemirror/plugin.js, add the following code to the showSourceEditor function:

    var codeMirrorIframeSource = url;
    // If the plugin is served from another host (e.g. Azure Blob Storage), load
    // the source page through the same-origin /static-asset/ nginx proxy instead.
    if (url.split('/')[2] !== window.location.host) {
        codeMirrorIframeSource = window.location.origin + "/static-asset/" + url.split('studio/')[1];
    }

Screenshot for Reference:

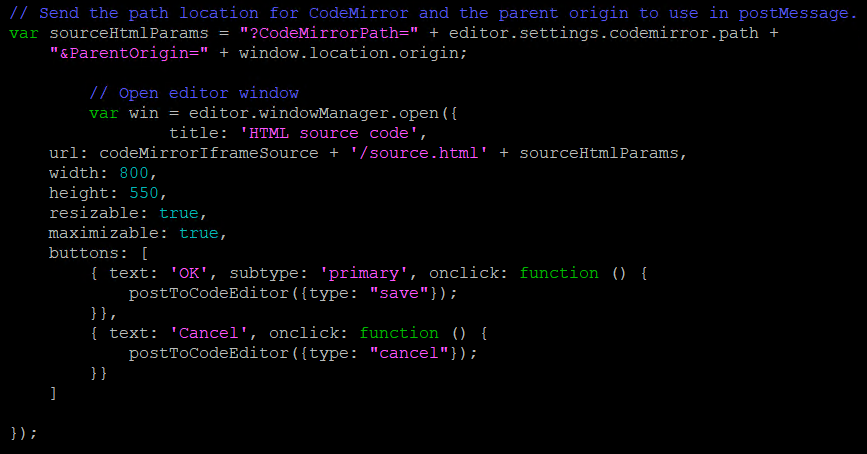

Also, in the editor.windowManager.open call, set the url property to:

    url: codeMirrorIframeSource + '/source.html' + sourceHtmlParams,

Screenshot for Reference:

Edit lms.yml and studio.yml files:

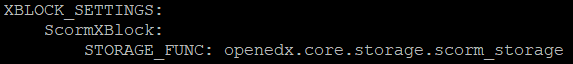

Replace the value for XBLOCK_SETTINGS' ScormXBlock with the following in the /edx/etc/lms.yml and /edx/etc/studio.yml:

XBLOCK_SETTINGS:

ScormXBlock:

STORAGE_FUNC: openedx.core.storage.scorm_storage

Screenshot for Reference:

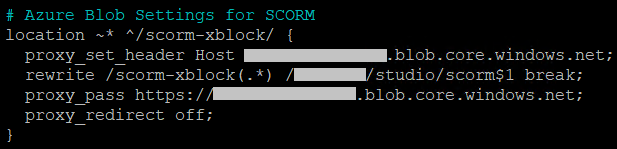

Edit Nginx files:

Add the following location block to the server block in /etc/nginx/sites-enabled/lms so that SCORM content is served from the LMS origin, avoiding CORS errors:

    # Azure Blob Settings for SCORM
    location ~* ^/scorm-xblock/ {
        proxy_set_header Host [AZURE_STORAGE_ACCOUNT_NAME].blob.core.windows.net;
        rewrite /scorm-xblock(.*) /[AZURE_BLOB_NAME]/studio/scorm$1 break;
        proxy_pass https://[AZURE_STORAGE_ACCOUNT_NAME].blob.core.windows.net;
        proxy_redirect off;
    }

Screenshot for Reference:

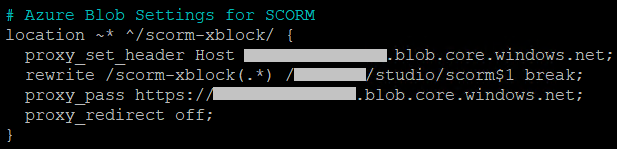
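
The rewrite rule maps a proxied request path such as /scorm-xblock/<block-id>/<sha1>/index.html to the blob path under studio/scorm/ in the container; when the regex matches, nginx replaces the whole URI with the replacement string. A Python emulation of that single rule, with "mycontainer" as a hypothetical value for [AZURE_BLOB_NAME] (it ignores query strings, which nginx's rewrite does not match against):

```python
import re

CONTAINER = "mycontainer"  # hypothetical value for [AZURE_BLOB_NAME]
SCORM_REWRITE = re.compile(r"/scorm-xblock(.*)")


def scorm_upstream_path(request_path):
    """Emulate `rewrite /scorm-xblock(.*) /<container>/studio/scorm$1 break;`."""
    match = SCORM_REWRITE.search(request_path)
    if match is None:
        return request_path  # rule does not apply
    return "/" + CONTAINER + "/studio/scorm" + match.group(1)


print(scorm_upstream_path("/scorm-xblock/abc123/deadbeef/index.html"))
# /mycontainer/studio/scorm/abc123/deadbeef/index.html
```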

Edit the /etc/nginx/sites-enabled/cms file to contain the following location blocks:

    # Azure Blob Settings for SCORM
    location ~* ^/scorm-xblock/ {
        proxy_set_header Host [AZURE_STORAGE_ACCOUNT_NAME].blob.core.windows.net;
        rewrite /scorm-xblock(.*) /[AZURE_BLOB_NAME]/studio/scorm$1 break;
        proxy_pass https://[AZURE_STORAGE_ACCOUNT_NAME].blob.core.windows.net;
        proxy_redirect off;
        #proxy_pass_header Content-Type;
    }

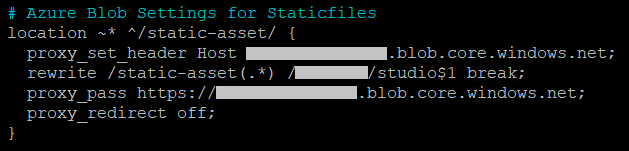

    # Azure Blob Settings for Staticfiles
    location ~* ^/static-asset/ {
        proxy_set_header Host [AZURE_STORAGE_ACCOUNT_NAME].blob.core.windows.net;
        rewrite /static-asset(.*) /[AZURE_BLOB_NAME]/studio$1 break;
        proxy_pass https://[AZURE_STORAGE_ACCOUNT_NAME].blob.core.windows.net;
        proxy_redirect off;
    }

Screenshot for Reference:
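
The plugin.js change and the /static-asset/ location work as a pair: the browser loads the CodeMirror source page from the Studio origin, and nginx maps that path back to the studio/ folder in the container. A Python sketch of the round trip; the function names, hosts, and container are hypothetical, and current_host/current_origin stand in for window.location.host/origin. Splitting on "studio/" takes the text after its first occurrence, so it assumes no earlier path segment ends in "studio".

```python
import re
from urllib.parse import urlsplit


def code_mirror_iframe_source(url, current_host, current_origin):
    """Python mirror of the plugin.js change (hypothetical helper)."""
    source = url
    if url.split("/")[2] != current_host:
        source = current_origin + "/static-asset/" + url.split("studio/")[1]
    return source


def static_asset_upstream(request_path, container):
    """Emulate `rewrite /static-asset(.*) /<container>/studio$1 break;`."""
    match = re.search(r"/static-asset(.*)", request_path)
    if match is None:
        return request_path
    return "/" + container + "/studio" + match.group(1)


plugin_url = ("https://myaccount.blob.core.windows.net/mycontainer/studio/"
              "js/vendor/tinymce/js/tinymce/plugins/codemirror")
iframe_src = code_mirror_iframe_source(plugin_url, "studio.example.com", "https://studio.example.com")
print(iframe_src)
print(static_asset_upstream(urlsplit(iframe_src).path, "mycontainer"))
```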

Upgrade django-pipeline:

Check the installed django-pipeline version; if it is older than 2.0.4, upgrade it:

$ pip install django-pipeline==2.0.4

Restart, Update Assets, and Collectstatic:

After configuring all the settings, restart all the services using the following commands:

$ /edx/bin/supervisorctl restart all

$ sudo service nginx restart

Then run the following command to update assets (make sure the edxapp environment is sourced):

$ paver update_assets --settings=production

Then run the following commands to collect static files (make sure the edxapp environment is sourced):

$ python manage.py lms --settings=production collectstatic --noinput

$ python manage.py cms --settings=production collectstatic --noinput